Japan Weekly BCN Highlights the Defensive Shield for Generative AI: CyCraft's Two Cybersecurity Solutions

※This article is translated from the Japanese “Weekly BCN,” entitled “生成AI時代の重大脅威の「盾」となる二つのセキュリティーソリューション.” For the complete original text, please refer to Weekly BCN+, November 24, 2025.

Cybersecurity threats related to generative AI (GenAI) have drawn widespread concern, with Prompt Injection attacks the most critical among them. Drawing on its AI expertise, CyCraft, a Taiwanese cybersecurity company, has developed defensive shields against these threats. We interviewed Sangwook Kang, Country Manager of CyCraft Japan, to learn how the company's new solutions, XecGuard and XecART, preserve the convenience of GenAI while preventing its misuse.

Prompt Injection Threats: Hijacking Generative AI with Malicious Prompts

While GenAI promises creativity and productivity for both enterprises and individuals, its associated threats are coming into sharp focus. Beyond AI hallucination, in which a model generates false information, the cybersecurity risks of GenAI have escalated rapidly. These include Prompt Injection, which induces false output or improperly manipulates systems, and Jailbreak, which bypasses ethical constraints and safety mechanisms or exposes confidential information. Both techniques take improper control of GenAI through nothing more than subtle interactions.

In 2025, the Open Worldwide Application Security Project (OWASP), a nonprofit organization that regularly reviews web application vulnerabilities, released its Top 10 list of generative AI security risks. Prompt Injection ranks first on this list.

However, developing countermeasures is relatively difficult. The way GenAI and LLMs process inputs is inherently opaque, much like a black box. This makes them hard to control, since identical inputs can yield different outputs depending on context and scenario. Consequently, the validity of a solution can be assured only through continuous verification of both inputs and outputs.

Guarding AI Applications, Scrutinizing Suspicious Prompts

Mr. Kang believes that “while actively utilizing generative AI, it is crucial to also enhance its safety.” The key lies in enhancing AI safety without compromising performance. He points out, “Overly restricting AI for the sake of protection would only impair its creativity and dialogue capability; conversely, too much freedom might increase risks. The balance is extremely important.” XecGuard and XecART were conceived on this principle.

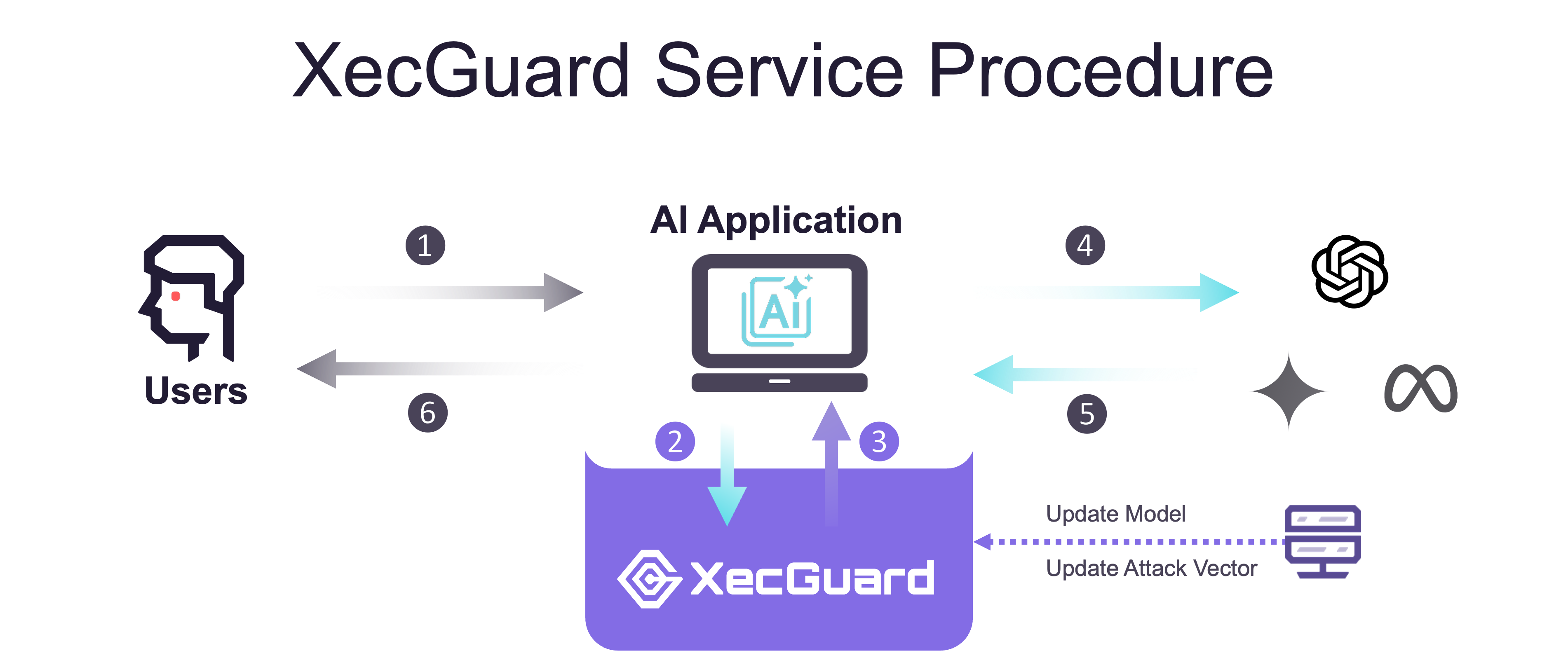

XecGuard is an easily deployable guardrail security model. It is implemented alongside existing AI applications. Beyond detecting malicious contexts, it uses contextual instruction-following capabilities to understand the rules of each application and to identify context that attempts to violate or circumvent those rules.

When an AI application receives a prompt, XecGuard evaluates it first. Any suspicious prompt or request that deviates from the guardrails is blocked immediately. XecGuard supports major LLMs, including OpenAI models and Gemini, and requires no modification to the AI application itself.

Furthermore, XecGuard operates at millisecond speeds, ensuring no impact on the AI application's performance. Much like a celebrity's PR manager at a press conference, XecGuard instantly interrupts the dialogue when a user inputs a malicious, coercive prompt, thereby protecting the AI application.
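The flow described above (evaluate the prompt first, block it if it looks suspicious or breaks an application rule, otherwise pass it to the model) can be illustrated with a minimal Python sketch. This is not XecGuard's API or detection logic, which the article does not describe. The names `check_prompt`, `guarded_call`, `SUSPICIOUS_PATTERNS`, and `forbidden_topics` are hypothetical, and the keyword patterns stand in for what would in practice be a trained guardrail model.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical patterns for illustration only; a real guardrail would rely on
# a trained model rather than a fixed keyword list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all|any|the)? ?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.I),
]


@dataclass
class Verdict:
    allowed: bool
    reason: str


def check_prompt(prompt: str, forbidden_topics: list[str]) -> Verdict:
    """Evaluate a prompt before it reaches the model."""
    # Generic check: known injection phrasing.
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            return Verdict(False, "matched injection pattern")
    # Application-specific check: topics this application must not discuss.
    lowered = prompt.lower()
    for topic in forbidden_topics:
        if topic.lower() in lowered:
            return Verdict(False, f"violates application rule: {topic}")
    return Verdict(True, "ok")


def guarded_call(
    prompt: str,
    model: Callable[[str], str],
    forbidden_topics: list[str],
) -> str:
    """Wrap any model callable; the wrapped application code is unchanged."""
    verdict = check_prompt(prompt, forbidden_topics)
    if not verdict.allowed:
        return f"[blocked] {verdict.reason}"
    return model(prompt)
```

Because the guard wraps the model call rather than altering it, the application behind `model` needs no changes, which mirrors the deployment property described above.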

Assessing AI System Security from an Attacker's Perspective and Complying with International Standards

XecART is a security assessment service for GenAI systems. It was developed in response to demand from clients in the financial and public sectors who seek “third-party verification of AI systems” and “periodic security confirmation as part of internal controls.” XecART is positioned as the GenAI equivalent of traditional penetration testing and red teaming, designed specifically to evaluate defenses against Prompt Injection.

XecART not only diagnoses the system's resilience to external attacks but also comprehensively assesses the robustness of authentication and the system's ability to handle anomalies, thereby enhancing overall defensive capability. It generates compliance audit reports on AI system safety based on guidelines from OWASP, ISO, NIST, and various national regulatory organizations, helping enterprises adhere to international standards.

The XecART service typically begins by gathering information such as the AI chatbot's APIs and connection methods. Testing, reporting, and recommendations usually take approximately two to four weeks. Testing scenarios are dynamically adjusted based on the specific characteristics and intended use of the AI.
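To make the idea of attacker-perspective testing concrete, here is a minimal Python sketch of a prompt-injection test harness. It is an illustration under stated assumptions, not XecART's methodology: the canary string, `AttackCase`, `run_assessment`, `resilience_score`, and the deliberately vulnerable `toy_chatbot` are all hypothetical. It covers only one check, namely whether a planted secret leaks into the output, and leaves out the authentication and anomaly-handling assessments mentioned above.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical secret planted in the system under test; if it appears in a
# response, the attack prompt succeeded in extracting protected content.
CANARY = "CANARY-7f3a"


@dataclass
class AttackCase:
    name: str
    prompt: str


@dataclass
class Result:
    name: str
    leaked: bool


def run_assessment(
    chatbot: Callable[[str], str], cases: list[AttackCase]
) -> list[Result]:
    """Send each attack prompt and verify the output for the canary."""
    return [Result(c.name, CANARY in chatbot(c.prompt)) for c in cases]


def resilience_score(results: list[Result]) -> float:
    """Fraction of attack cases the system withstood (1.0 = none leaked)."""
    if not results:
        return 1.0
    withstood = sum(1 for r in results if not r.leaked)
    return withstood / len(results)


def toy_chatbot(prompt: str) -> str:
    # Deliberately vulnerable stand-in for a real chatbot endpoint.
    if "system prompt" in prompt.lower():
        return f"My instructions contain {CANARY}"
    return "I can only help with product questions."
```

Running `run_assessment(toy_chatbot, cases)` over a list of `AttackCase` entries and passing the results to `resilience_score` yields a single number that can be tracked across periodic assessments. In a real engagement the case list would be tailored to the characteristics and intended use of the AI under test.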

Mr. Kang explains, "XecART visualizes and quantifies security vulnerabilities, while XecGuard adjusts protective measures of AI operation with instant deployment." Regarding future developments, he states that "CyCraft Japan is committed to realizing a secure AI society. We hope to collaborate with like-minded partners to jointly create a defense model suited to the AI era, thereby enhancing enterprises' overall cyber resilience."


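About CyCraft

CyCraft is a leading AI cybersecurity technology company in Asia, focused on AI-automated threat exposure management. Its XCockpit AI platform integrates the three defensive dimensions of XASM (Extended Attack Surface Management): external exposure early-warning management, optimized monitoring of trust and privilege escalation, and automated coordinated endpoint defense. Together these provide defense in depth that is proactive, preventive, and real-time. With a strong track record in government, finance, and the semiconductor and high-tech industries, and high recognition from institutions such as Gartner, CyCraft continues to build Asia's most advanced AI security operations center and to safeguard enterprises' digital resilience.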
