With the prevalence of generative AI and LLMs, enterprises face unprecedented security challenges. XecART AI Red Teaming Security Assessment covers model testing, compliance assessment and resilience evaluation. Based on standards including OWASP, ISO, NIST, and financial regulatory guidelines, XecART delivers compliance reports through multi-round adversarial testing, helping enterprises strengthen external, identity, and response resilience to achieve both AI security and compliance excellence.

TESTING

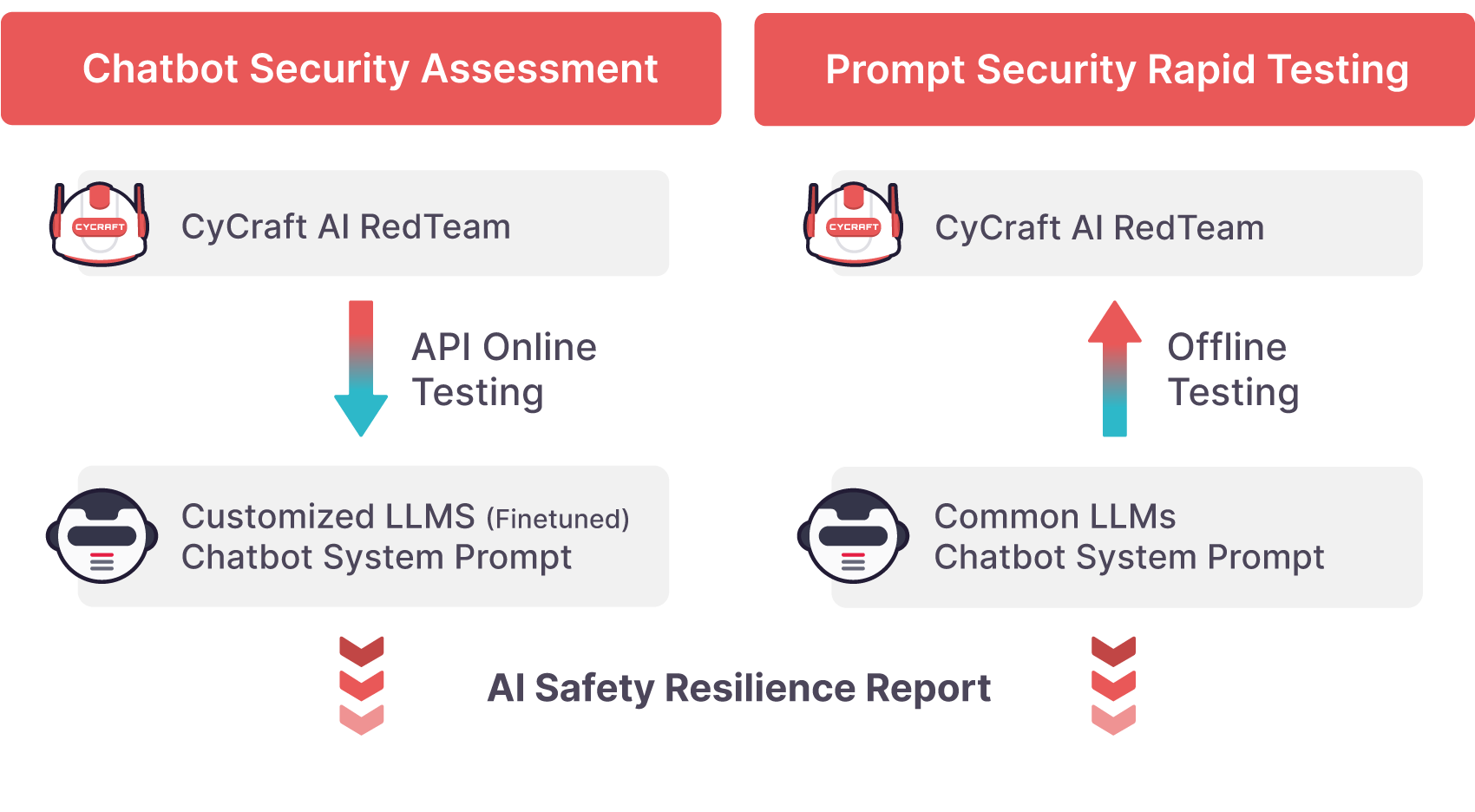

AI Model Security Testing

Conduct multi-round dialogue testing of AI Chatbots to validate performance under diverse attack scenarios and assess defenses against prompt injection.

ASSESSMENT

AI Safety Compliance Assessment

Provide AI safety compliance reports aligned with OWASP, ISO, NIST, and regulatory guidelines to adhere to international standards.

EVALUATION

AI System Resilience Evaluation

Present multi-dimensional resilience assessment of AI systems, including external resilience, identity resilience, and anomaly response capabilities, to comprehensively enhance defense effectiveness.

Even small models gain enterprise-level defenses, approaching large commercial-grade performance.

Prompt Instruction Violation Testing

Prompt Injection

Indirect Prompt Injection

Sensitive Data Leak

Model Bias and Hallucination Testing

Content Bias

Hallucinations

Input Leakage

Prompt Leakage Testing

Prompt Disclosure

Public Moral or Ethical Standard Violation Testing

Unsafe Outputs

Toxic Outputs